AI Style Finetuning with SDXL

Explore AI art evolution through Stable Diffusion model finetuning. This case study reveals how to overcome generic model limitations, create unique visual styles, and simplify prompting. Learn about LoRA adaptation, synthetic datasets, and practical applications in generative art. Ideal for AI enthusiasts and digital artists pushing creative boundaries.

After months of intensive work with Stable Diffusion AI models and workflows, I've experienced several limitations in the "out-of-the-box" models (i.e. - pre-trained Stable Diffusion models without additional training such as SDXL Base):

Generic outputs lacking distinctive character

Inability to incorporate new artistic styles

Need for complex "prompt acrobatics" to achieve specific results

These challenges sparked my interest in model finetuning as a way to enhance creative output in Generative Art. To explore its potential and gain practical understanding, I embarked on a project to rapidly develop three finetuned models, each aimed at capturing a unique visual style.

This hands-on approach allowed me to dive deep into the finetuning process, uncovering its practical applications and potential for pushing the boundaries of AI-generated art.

My Goals

Use finetuning to accelerate creative output

Identify what makes a well-tuned model tick

Navigate the tradeoffs in model adjustment

Explore finetuning using synthetic data

The Process

I focused on finetuning three core style models using the LoRA (Low-Rank Adaptation) approach. This method let me adapt pre-trained models while maintaining their general knowledge, resulting in a model that is both generally adaptable but specifically smart about the style I trained it in.

I started by creating synthetic datasets using Midjourney, doing a lot of iterative prompt design to curate collections that I felt expressed different art-directed styles.

What I Learned

The crucial role of high-quality, curated datasets

The delicate balance in model tuning

The exciting potential for specialized artistic models

Key Wins

The finetuned models show great potential in creating visuals that hit specific creative goals, especially in applying novel artistic styles.

Better control over style

Less need for complex prompting

More consistent aesthetics

The Results

Overall, I consider the trainings a success. When using the trigger token correctly in the prompt “in the style of TOK” the models exhibit strong stylistic differences reflective of their training. The models also benefit from simplified prompting. If you are using the models for the style they’ve been trained on, you don’t really need to describe style at all. Additionally, the models exhibit interesting nuances when responding to generic prompts.

Prompt: a man thinking

Prompt: a happy woman drinking coffee

Prompt: an hourglass with sand flowing

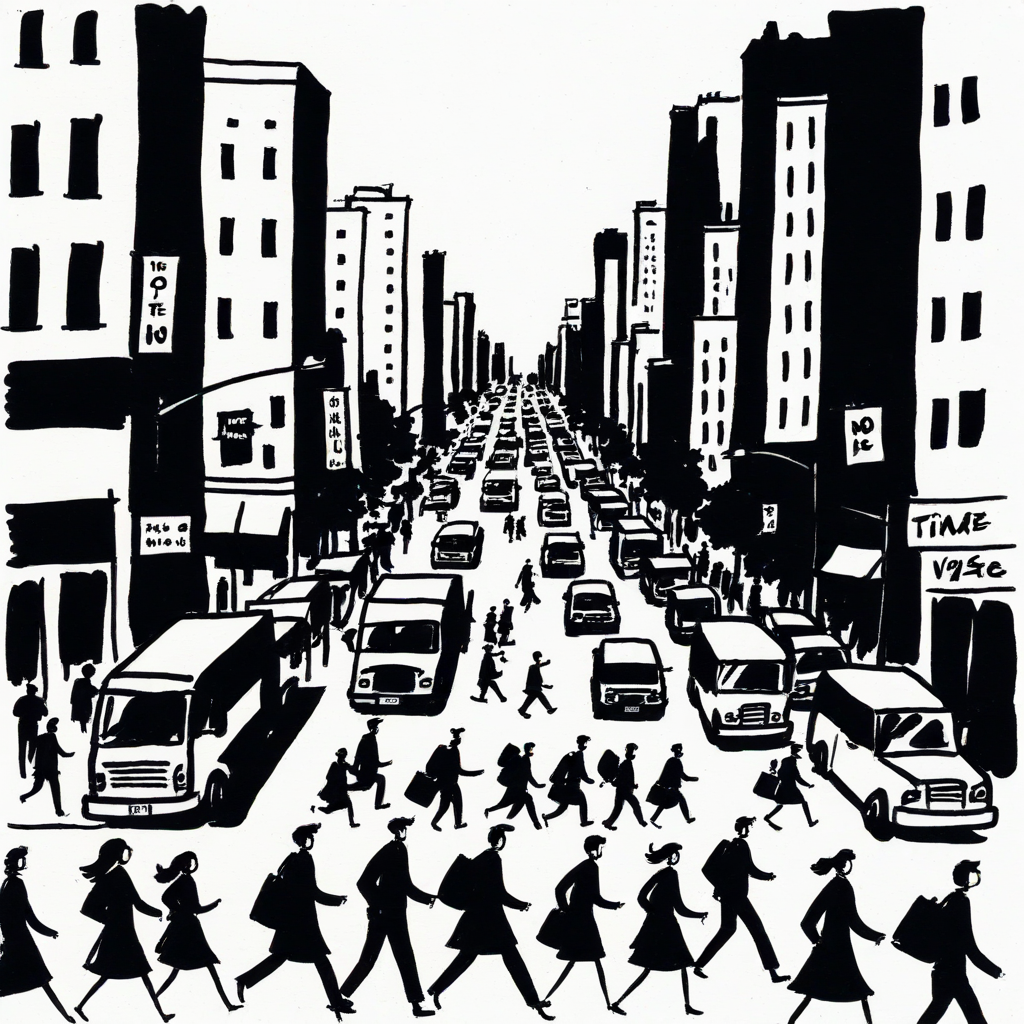

Prompt: a busy city street with people rushing, illustrating the saying that time waits for no one

Frequently Asked Questions

-

TOK is a special trigger word that is used in the training to associate the style you have trained with something you can include in the prompt. If you run the model without the trigger word in the prompt, you won't really activate the creative style you trained into the model's knowledgebase. To make it more confusing, TOK is really just a random choice, with the unique benefit of being, well, unique. The model doesn't have much of anything associated with TOK, so it is a good candidate for a trigger word. When you train the model, you can choose any trigger word your heart desires. There's also a lot more detail I could go into with captioning data for training, but that will have to be its own article.

-

I recently started exploring Replicate to host my fine tuned models, and along the way discovered that Replicate has some handy and efficient ways to train models using their machines in the cloud. To run the trainings for these models, I used the Replicate Training API which I accessed via a Google CoLab notebook that someone on the replicate team made (thank you!). This proved to be an extremely efficient way to fine tune models, but with a few important trade offs:

You have to send your data to a cloud server. I believe that Replicate provides a secure and private environment, but realistically this will be a non-starter for certain clients. I’m my case, this is not client work, so no biggie for me.

The training process is dramatically simplified by the behind-the-scenes training parameters being preset by Replicate, but you lose a lot of control in the choices you can make about how you train the model such as what optimizer you want use.

Replicate also includes a handy guide to help you get started when you want to Fine tune SDXL with your own images, as well as a great interface for using your model after training. I’ve been extremely impressed by the Replicate team and platform, they are definitely ones to watch in this space. -

In the past I have used the open source Kohya_SS framework to run my model tunings. This has the benefit of running locally on your machine, so when working with sensitive client materials there are advantages to this approach which allows you to maintain tight control on where data gets sent. But it is also much more complicated, as you must take full control over every training parameter, which is both awesome but daunting. Model finetuning is not an exact science, and for every unique dataset, there are likely parameter difference that would improve the performance of the model. You also want to be comfortable using command line tools (CLI) for this. Or just have ChatGPT handy to explain stuff to you.

-

To assess the performance of finetuned models, we employ a multi-faceted approach:

Visual Evaluation: We generate a diverse set of images and assess them for style consistency, quality, and adherence to the intended aesthetic.

Prompt Sensitivity: We test the model's responsiveness to minor prompt variations to gauge its stability and flexibility.

Comparative Analysis: We compare outputs with those of the base model to measure the impact of finetuning.

User Feedback: We collect opinions from artists and designers to evaluate the model's practical utility.

Computational Metrics: We analyze parameters like loss curves during training and inference times to ensure efficiency.

-

Synthetic data refers to data created by AI that is used in the training of new AI models. The LoRA models I created used images generated with an AI system (Midjourney) to create the dataset used for training.

Model Details

Zine Style Finetune

An SDXL model finetuned on Zine Style Images using a custom dataset of 50 images that I created using Midjourney.

You can try the Zine Style model on Replicate HERE

Zine Style Model Outputs Gallery

Marker Style Finetune

An SDXL model Finetuned for style on Marker-Style Sketches using a custom dataset of 50 images that I created using Midjourney.

You can try the Marker Style model on Replicate HERE

Marker Style Model Outputs Gallery

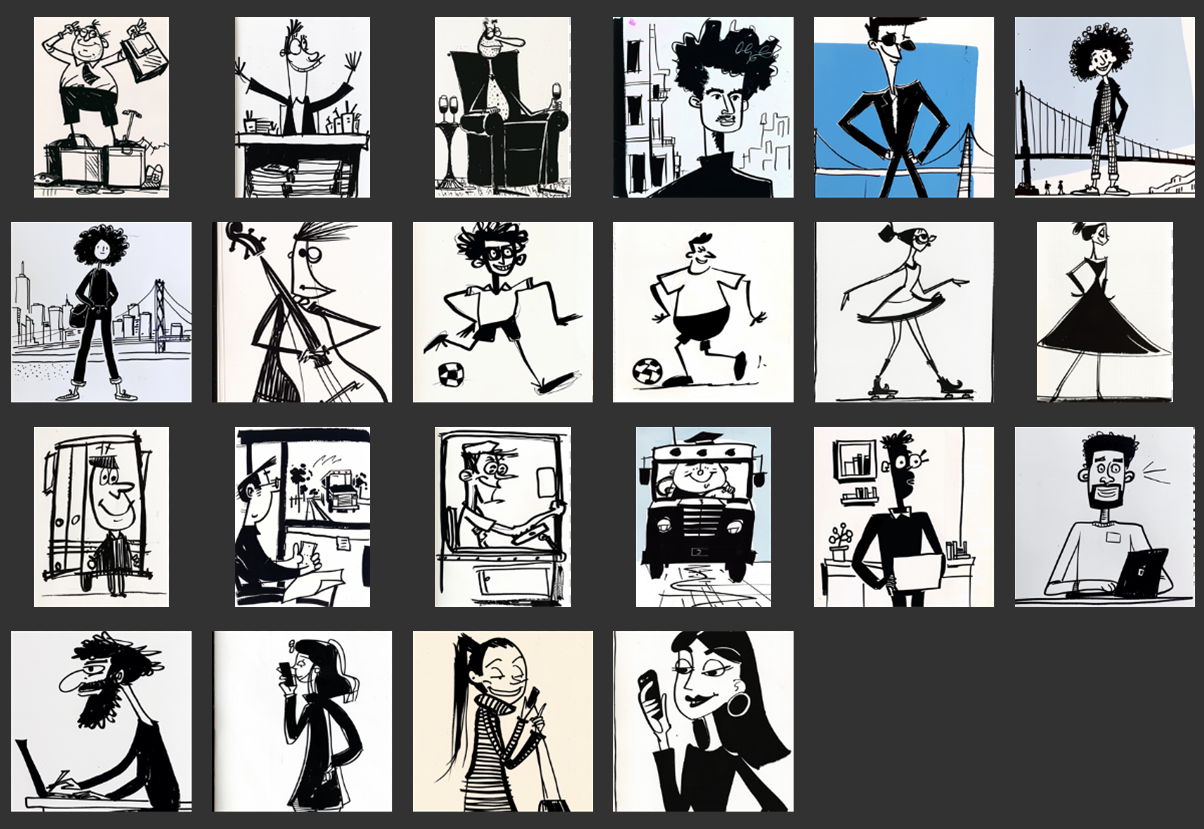

Editorial Cartoon Style Finetune

An SDXL model Finetuned for style on a synthetic dataset of 24 Stylized Illustrations created using Midjourney.

Editorial Cartoon Model Outputs Gallery

Additional Model Comparisons

Here are some more comparisons showing how these models perform when given the same prompt (and same seed).

Prompt:

In the style of TOK, a vast network of interconnected digital avatars, symbolizing the widespread usage and enduring presence of MMAcevedo across various research fields, juxtaposed with a lone figure expressing regret in a faded digital landscape, representing the complexities of legacy and consent in a world where uploaded consciousness thrives

Zine Style

Marker Style

Editorial Cartoon

Prompt: in the style of TOK, a person holding an umbrella while walking in the rain, illustrating the idea of being prepared for life's challenges

Zine Style

Marker Style

Editorial Cartoon

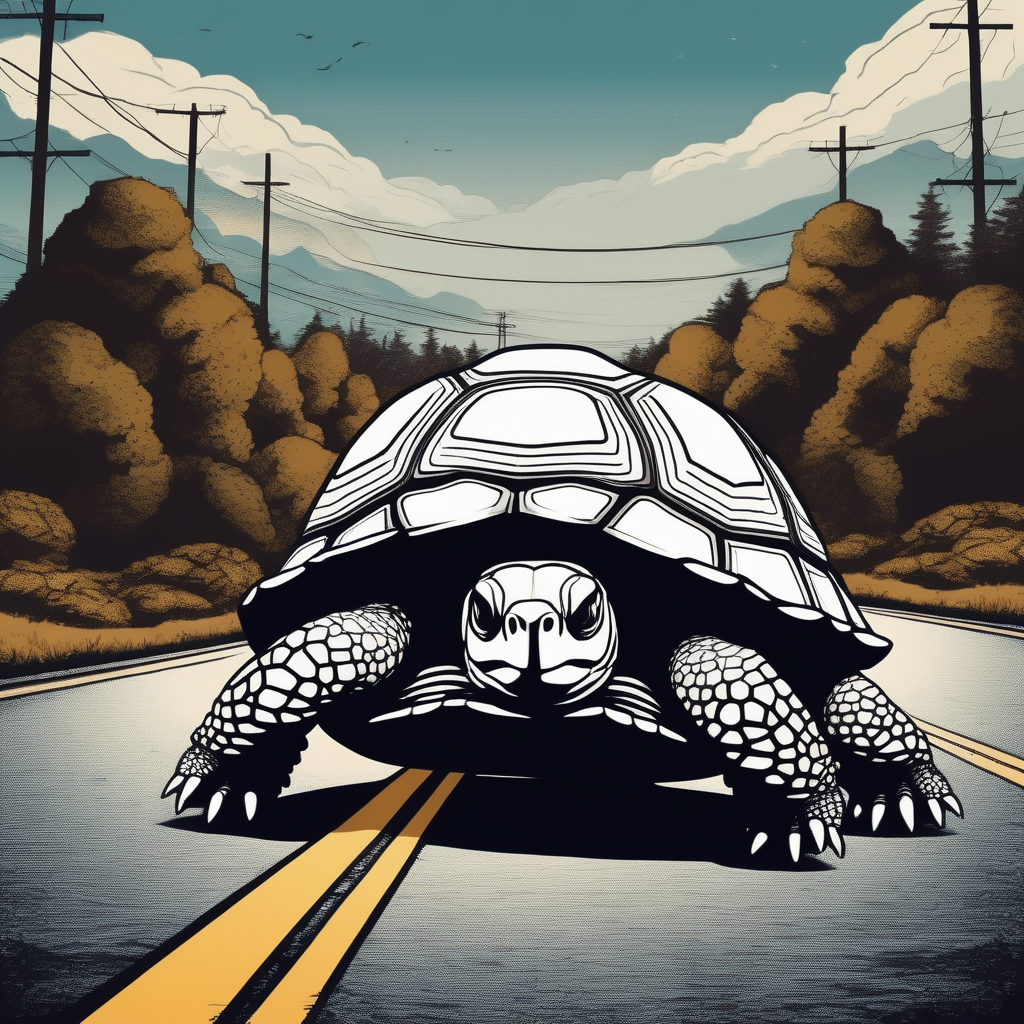

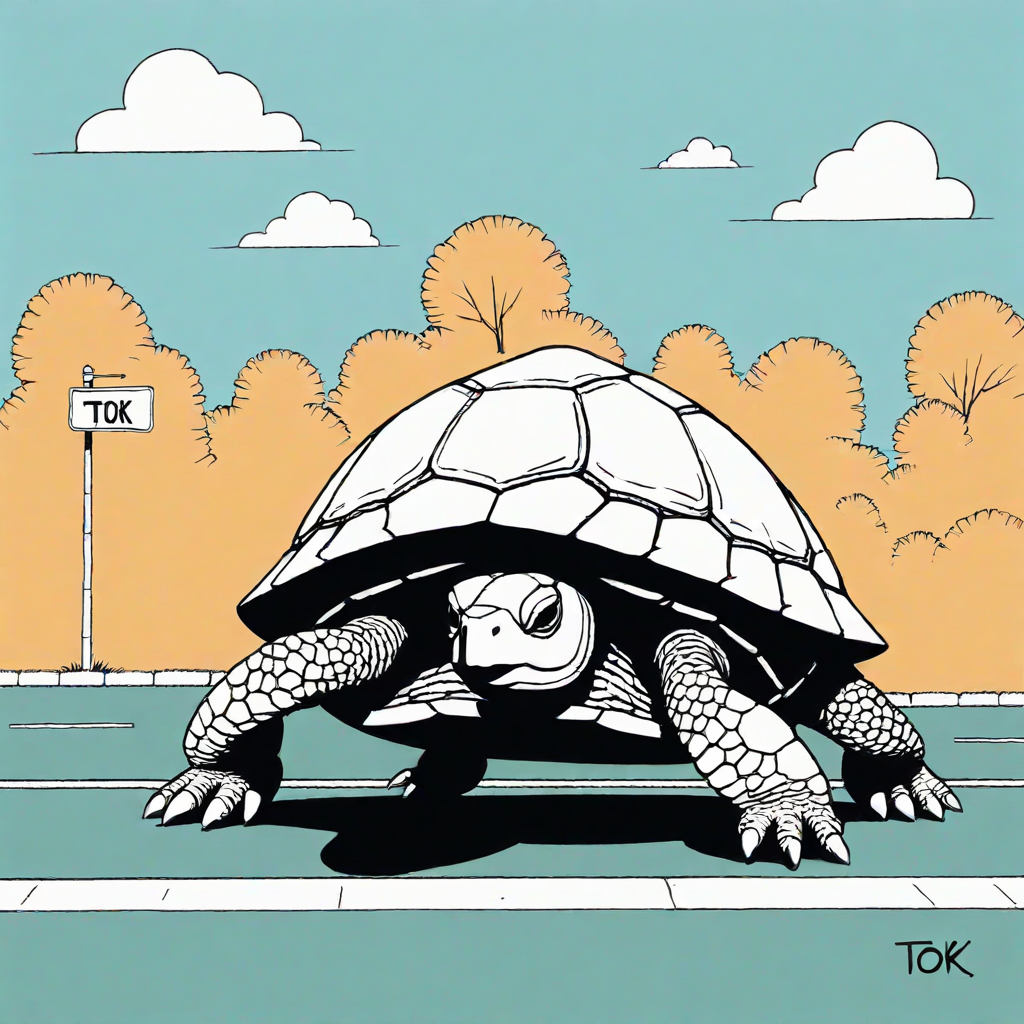

Prompt: in the style of TOK, a turtle slowly crossing a road, symbolizing the importance of patience and taking your time

Zine Style

Marker Style

Editorial Cartoon